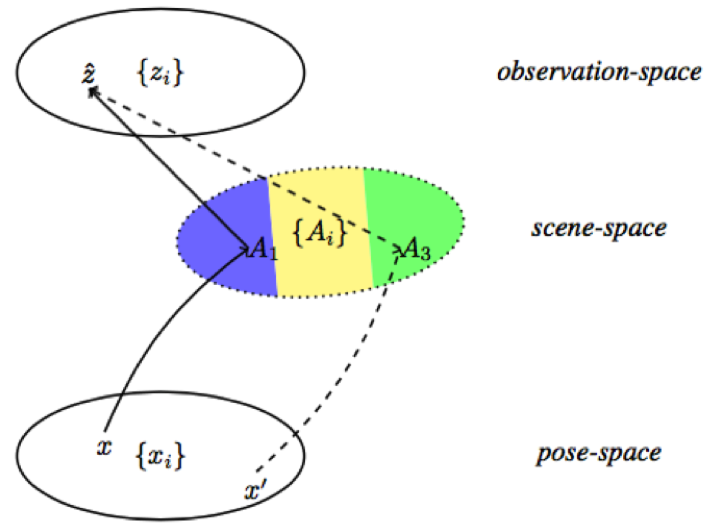

The ability to operate autonomously in an unknown, possibly dynamically changing environment is essential in robotics and in other application domains. To do so, the robot has to perceive the environment correctly and be capable of intelligently deciding its next actions. Yet the world is often full of ambiguity which, together with other sources of uncertainty, makes perception a challenging task. For example, consider matching images from two different places that are similar in appearance (possibly observed by different robots), or attempting to recognize an object that, from the current viewpoint, looks similar to another object. Both are ambiguous situations in which naive, straightforward approaches are likely to yield incorrect results: mistakenly considering the two places to be the same place, or incorrectly associating the observed object.

More advanced approaches are therefore required to enable reliable operation. These approaches typically involve probabilistic data association and tracking multiple hypotheses given the available data. Yet robust perception approaches have typically focused on the passive case, where the robot's actions are determined externally.
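As a minimal illustration of hypothesis tracking, the Python sketch below keeps a probability for each discrete data-association hypothesis and updates it with Bayes' rule when a new observation arrives. It assumes the likelihood of the observation under each hypothesis is already available; the function name `update_hypotheses`, the pruning threshold and the numbers in the usage lines are hypothetical and are not taken from this research.

```python
from typing import Dict


def update_hypotheses(weights: Dict[str, float],
                      likelihoods: Dict[str, float],
                      prune_below: float = 1e-3) -> Dict[str, float]:
    """Bayes update of discrete data-association hypothesis weights.

    weights: prior probability of each hypothesis (assumed to sum to 1)
    likelihoods: p(z | hypothesis) for the newly received observation z
    prune_below: posterior weight under which a hypothesis is discarded
    """
    # Unnormalized posterior: prior weight times observation likelihood
    unnormalized = {h: w * likelihoods.get(h, 0.0) for h, w in weights.items()}
    total = sum(unnormalized.values())
    if total == 0.0:
        raise ValueError("observation is inconsistent with every hypothesis")
    posterior = {h: w / total for h, w in unnormalized.items()}

    # Prune unlikely hypotheses to bound how many are tracked, then renormalize
    kept = {h: w for h, w in posterior.items() if w >= prune_below}
    if not kept:  # threshold too aggressive: keep every hypothesis instead
        return posterior
    norm = sum(kept.values())
    return {h: w / norm for h, w in kept.items()}


# Hypothetical example: is the current image from the same place as a stored one?
prior = {"same_place": 0.5, "different_place": 0.5}
# A near-identical image is likely under both hypotheses in an ambiguous environment
likelihoods = {"same_place": 0.9, "different_place": 0.8}
posterior = update_hypotheses(prior, likelihoods)
print(posterior)
```

With these illustrative numbers both hypotheses remain plausible (roughly 0.53 versus 0.47), which is the kind of ambiguity that passively processing the incoming data may not resolve.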

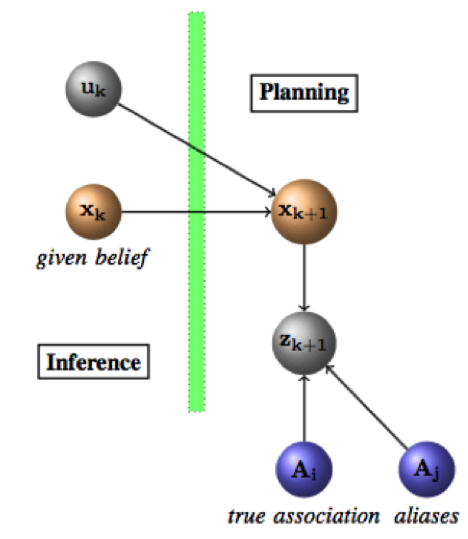

In contrast, in this research we investigate active robust perception: approaches that determine the robot's future actions so as to improve aspects of robust perception. To do so, we incorporate data association within belief space planning, while also accounting for additional sources of uncertainty (e.g., stochastic control, imperfect sensing and uncertain environments).

One possible application of this concept is the kidnapped robot problem in ambiguous environments, e.g., robot navigation in a multi-story building whose corridors are similar in appearance (see image above).
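To make the idea of choosing actions that reduce ambiguity more concrete, here is a minimal, myopic Python sketch for a kidnapped robot that does not know which floor it is on. It scores each candidate action by the expected entropy of the floor belief after the next observation and picks the lowest. This one-step information-gain criterion, the action names and the observation models are illustrative assumptions; it is not the belief space planning formulation used in this research, which also accounts for stochastic control and uncertain environments.

```python
import math
from typing import Dict

Belief = Dict[str, float]               # hypothesis -> probability
ObsModel = Dict[str, Dict[str, float]]  # hypothesis -> {observation: p(z | hypothesis, action)}


def entropy(belief: Belief) -> float:
    """Shannon entropy (in nats) of a discrete belief."""
    return -sum(p * math.log(p) for p in belief.values() if p > 0.0)


def expected_posterior_entropy(belief: Belief, model: ObsModel) -> float:
    """Expected belief entropy after taking an action with the given observation model."""
    observations = {z for dist in model.values() for z in dist}
    expected = 0.0
    for z in observations:
        # Joint p(h, z) and marginal p(z) for this action
        joint = {h: p * model[h].get(z, 0.0) for h, p in belief.items()}
        p_z = sum(joint.values())
        if p_z == 0.0:
            continue
        posterior = {h: p / p_z for h, p in joint.items()}
        expected += p_z * entropy(posterior)
    return expected


def best_action(belief: Belief, models: Dict[str, ObsModel]) -> str:
    """Pick the action with the lowest expected posterior entropy (one step ahead)."""
    return min(models, key=lambda a: expected_posterior_entropy(belief, models[a]))


# Hypothetical scenario: the robot is equally likely to be on any of three floors
floors = ["floor_1", "floor_2", "floor_3"]
belief = {f: 1.0 / len(floors) for f in floors}

models = {
    # Corridors look identical on every floor: the observation carries no information
    "drive_along_corridor": {f: {"corridor": 1.0} for f in floors},
    # A noisy floor sign: correct reading with prob. 0.8, each wrong reading with 0.1
    "read_floor_sign": {
        f: {g: (0.8 if g == f else 0.1) for g in floors} for f in floors
    },
}

print(best_action(belief, models))
```

Driving along an identical-looking corridor leaves the belief uniform (expected entropy ln 3), whereas reading the floor sign is expected to reduce it substantially, so `best_action` prefers the latter. A full planner would look several steps ahead and reason about where such disambiguating observations can actually be obtained.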

Related Publications: link to bib file