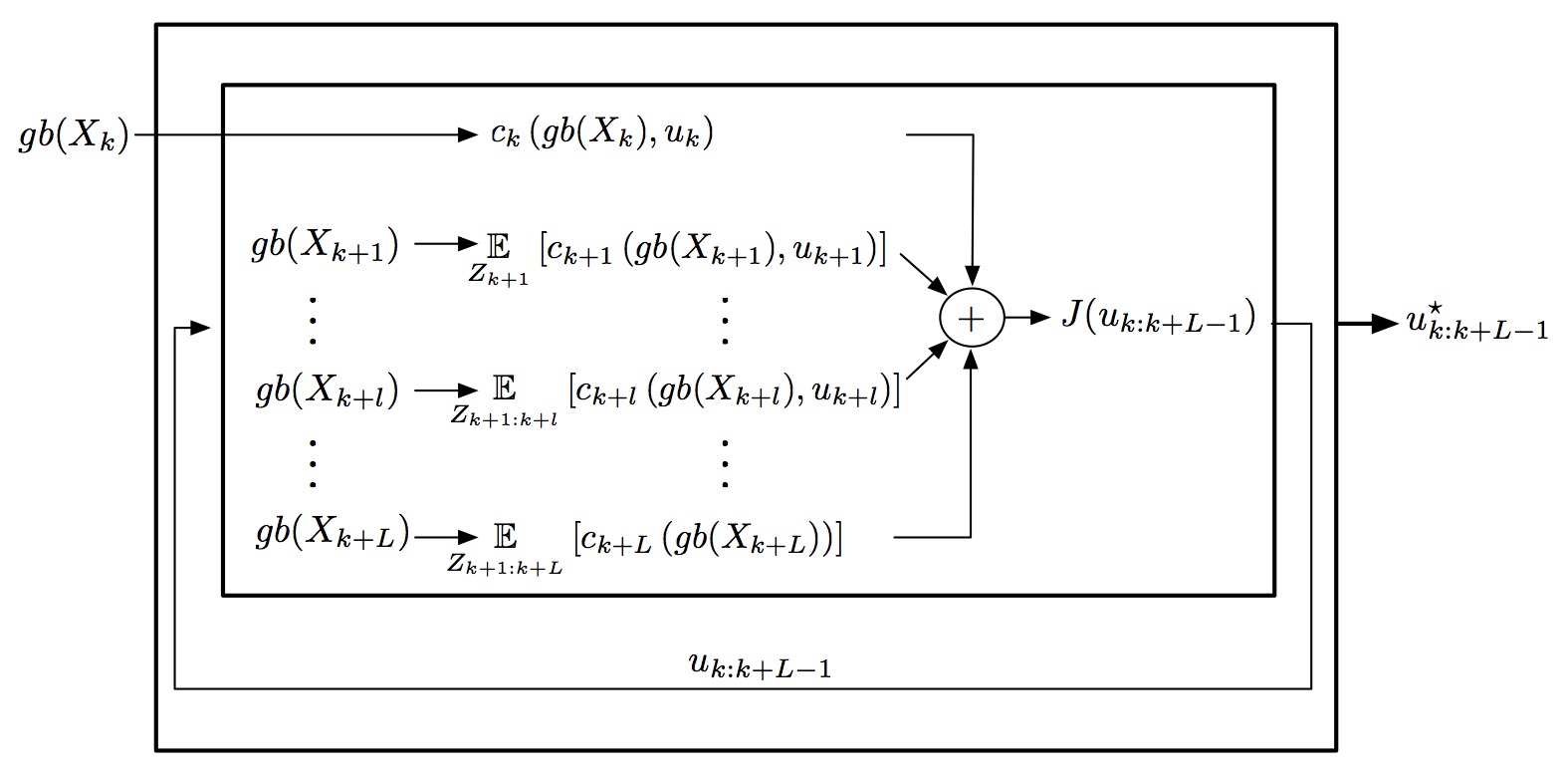

Reliable planning under uncertainty is crucial in many applications in which the platform operates with full or partial autonomy, such as autonomous navigation and exploration, monitoring, surveillance, and robotic surgery. Autonomous operation in complex, unknown scenarios requires a tight coupling of estimation and planning. The platform has to fuse sensor measurements to infer its own state and to build a model of the surrounding environment. Moreover, accomplishing given goals with high accuracy and robustness requires accounting for the different sources of uncertainty within motion planning. Consequently, planning should be performed in the /belief space/, that is, over probability distributions of the state rather than over the state itself; this problem is formalized as a partially observable Markov decision process (POMDP).
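
To make belief-space planning concrete, the following minimal Python sketch propagates a scalar Gaussian belief N(mean, var) along candidate control sequences and scores each sequence by control effort, accumulated uncertainty, and final distance to the goal. Everything in it is an illustrative assumption, not the method developed in this research: the linear motion and measurement models, the noise values `q` and `r`, the cost weights, the hypothetical `observable` predicate marking where a measurement is expected, and the common maximum-likelihood-observation simplification (predicted measurements change the variance but not the mean). For brevity it compares a few candidate sequences instead of optimizing over continuous controls.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence, Tuple


@dataclass(frozen=True)
class Belief:
    """Gaussian belief over a scalar state: b = N(mean, var)."""
    mean: float
    var: float


def predict(b: Belief, u: float, q: float) -> Belief:
    # Assumed motion model x' = x + u + w, w ~ N(0, q): the mean shifts by u, the variance grows by q.
    return Belief(b.mean + u, b.var + q)


def update(b: Belief, r: float) -> Belief:
    # Assumed measurement model z = x + v, v ~ N(0, r).
    # Maximum-likelihood observation (z = mean): only the variance is reduced.
    k = b.var / (b.var + r)  # Kalman gain
    return Belief(b.mean, (1.0 - k) * b.var)


def plan_cost(b0: Belief, controls: Sequence[float], goal: float, q: float, r: float,
              observable: Callable[[float], bool],
              w_goal: float = 1.0, w_u: float = 0.1, w_unc: float = 1.0) -> Tuple[float, Belief]:
    """Propagate the belief along `controls`, trading off effort, uncertainty and goal distance."""
    b = b0
    cost = 0.0
    for u in controls:
        b = predict(b, u, q)
        if observable(b.mean):  # a measurement is expected only where `observable` holds
            b = update(b, r)
        cost += w_u * u * u + w_unc * b.var
    cost += w_goal * (b.mean - goal) ** 2
    return cost, b


def best_plan(b0: Belief, candidates: List[Sequence[float]], goal: float, q: float, r: float,
              observable: Callable[[float], bool]) -> int:
    """Index of the lowest-cost candidate control sequence."""
    costs = [plan_cost(b0, c, goal, q, r, observable)[0] for c in candidates]
    return min(range(len(costs)), key=costs.__getitem__)


if __name__ == "__main__":
    near_start = lambda x: abs(x) <= 1.0  # hypothetical: measurements only near the start
    print(best_plan(Belief(0.0, 0.1), [[1, 1, 1, 1], [0, 0, 0, 0]], 4.0, 0.1, 0.1, near_start))
```

Staying put keeps the belief tight but never reaches the goal, so with these weights the sequence that drives to the goal wins (index 0) despite its larger final variance.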

In this research we develop approaches for planning under uncertainty when the model of the environment in which the platform operates is unknown or uncertain, and when sources of absolute information are unavailable (e.g., no GPS). We represent the platform state and the state of the surrounding environment within a /generalized/ belief space, and investigate approaches for planning in the continuous domain, without discretizing the state and control spaces.
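
A generalized belief treats the environment as part of the estimated state. The sketch below is only an illustration under assumed linear-Gaussian models: it keeps a joint Gaussian belief over a 1D robot position `x` and one static landmark position `l`, with a hypothetical relative measurement z = l - x + v. Because the landmark estimate becomes correlated with the robot estimate when it is first observed, re-observing it after the robot's own uncertainty has grown sharply reduces that uncertainty, which is the loop-closure effect. The function names, noise values, and measurement values are assumptions made for the example.

```python
from typing import List, Tuple

Vec = List[float]
Mat = List[List[float]]


def predict_joint(mean: Vec, cov: Mat, u: float, q: float) -> Tuple[Vec, Mat]:
    """Robot moves x' = x + u + w, w ~ N(0, q); the landmark is static."""
    new_mean = [mean[0] + u, mean[1]]
    new_cov = [[cov[0][0] + q, cov[0][1]],
               [cov[1][0], cov[1][1]]]
    return new_mean, new_cov


def update_relative(mean: Vec, cov: Mat, z: float, r: float) -> Tuple[Vec, Mat]:
    """Kalman update for the hypothetical relative measurement z = l - x + v, v ~ N(0, r)."""
    h = [-1.0, 1.0]                                                # measurement Jacobian H
    pht = [cov[i][0] * h[0] + cov[i][1] * h[1] for i in range(2)]  # P H^T
    s = h[0] * pht[0] + h[1] * pht[1] + r                          # innovation variance H P H^T + r
    k = [pht[i] / s for i in range(2)]                             # Kalman gain
    innovation = z - (mean[1] - mean[0])
    new_mean = [mean[i] + k[i] * innovation for i in range(2)]
    # P - K H P; P is symmetric, so (H P)_j equals (P H^T)_j
    new_cov = [[cov[i][j] - k[i] * pht[j] for j in range(2)] for i in range(2)]
    return new_mean, new_cov


def loop_closure_demo(q: float = 0.1, r: float = 0.01, steps: int = 5) -> Tuple[float, float]:
    """Robot variance just before and just after re-observing the landmark."""
    mean = [0.0, 0.0]
    cov = [[0.01, 0.0], [0.0, 100.0]]                  # robot well localized, landmark unknown
    mean, cov = update_relative(mean, cov, z=2.0, r=r)  # first sighting, landmark about 2 units ahead
    for u in [1.0] * steps + [-1.0] * steps:            # drive away and back without observations
        mean, cov = predict_joint(mean, cov, u, q)
    var_before = cov[0][0]
    mean, cov = update_relative(mean, cov, z=2.0, r=r)  # re-observe the same landmark
    return var_before, cov[0][0]


if __name__ == "__main__":
    print(loop_closure_demo())
```

With the default values the robot variance grows to about 1.01 while driving without observations and drops to about 0.03 once the landmark is observed again.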

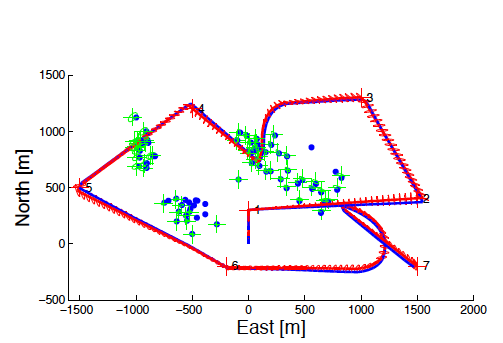

Below is a demonstration of this concept in an autonomous navigation scenario, in which the platform has to visit a number of goals (shown in green) while operating in an unknown, GPS-deprived environment. The planning algorithm trades off reaching the goals, control effort, and uncertainty. Whenever the uncertainty exceeds a threshold, the platform is guided towards previously observed regions to perform loop closure, thereby reducing its uncertainty.
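
The threshold rule described above can be sketched as a simple supervisory loop. This is a hypothetical simplification, not the planner used in the demonstration: uncertainty is a single scalar that grows in proportion to the distance travelled (`growth`), the platform moves in straight lines with a fixed `step`, the "previously observed regions" are the start position and the goals already reached, and arriving at such a region is assumed to reset the uncertainty to `closure_var`. Only the threshold switch is modeled here, not the trade-off between goals, control effort, and uncertainty.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def move_toward(pos: Point, target: Point, step: float) -> Tuple[Point, float]:
    """Move at most `step` along the straight line to `target`; return (new position, distance moved)."""
    d = math.dist(pos, target)
    if d <= step:
        return target, d
    t = step / d
    return (pos[0] + t * (target[0] - pos[0]), pos[1] + t * (target[1] - pos[1])), step


def choose_target(pos: Point, var: float, goals: List[Point], observed: List[Point],
                  threshold: float) -> Tuple[Point, str]:
    """Nearest previously observed region if uncertainty is too high, otherwise the next goal."""
    if var > threshold and observed:
        return min(observed, key=lambda p: math.dist(p, pos)), "loop_closure"
    return goals[0], "goal"


def simulate(start: Point, goals: List[Point], threshold: float, step: float = 1.0,
             growth: float = 0.1, closure_var: float = 0.05, max_steps: int = 200):
    pos, var = start, closure_var
    remaining = list(goals)
    observed = [start]  # regions the platform has already seen
    events = []
    for _ in range(max_steps):
        if not remaining:
            break
        target, mode = choose_target(pos, var, remaining, observed, threshold)
        pos, moved = move_toward(pos, target, step)
        var += growth * moved  # drift without absolute information grows with distance
        if pos == target:
            if mode == "loop_closure":
                var = closure_var  # assumed: re-observing a known region collapses the uncertainty
            else:
                remaining.pop(0)
                observed.append(target)
            events.append((mode, target))
    return events, var


if __name__ == "__main__":
    events, _ = simulate((0.0, 0.0), [(3.0, 0.0), (6.0, 0.0), (9.0, 0.0)], threshold=0.5)
    for mode, where in events:
        print(mode, where)
```

With three goals spaced 3 units apart and a threshold of 0.5, the platform returns to the most recently reached goal once before heading to each of the second and third goals; with a very large threshold it visits the goals directly.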